akarchmer0@gmail.com - LinkedIn - Google Scholar - X

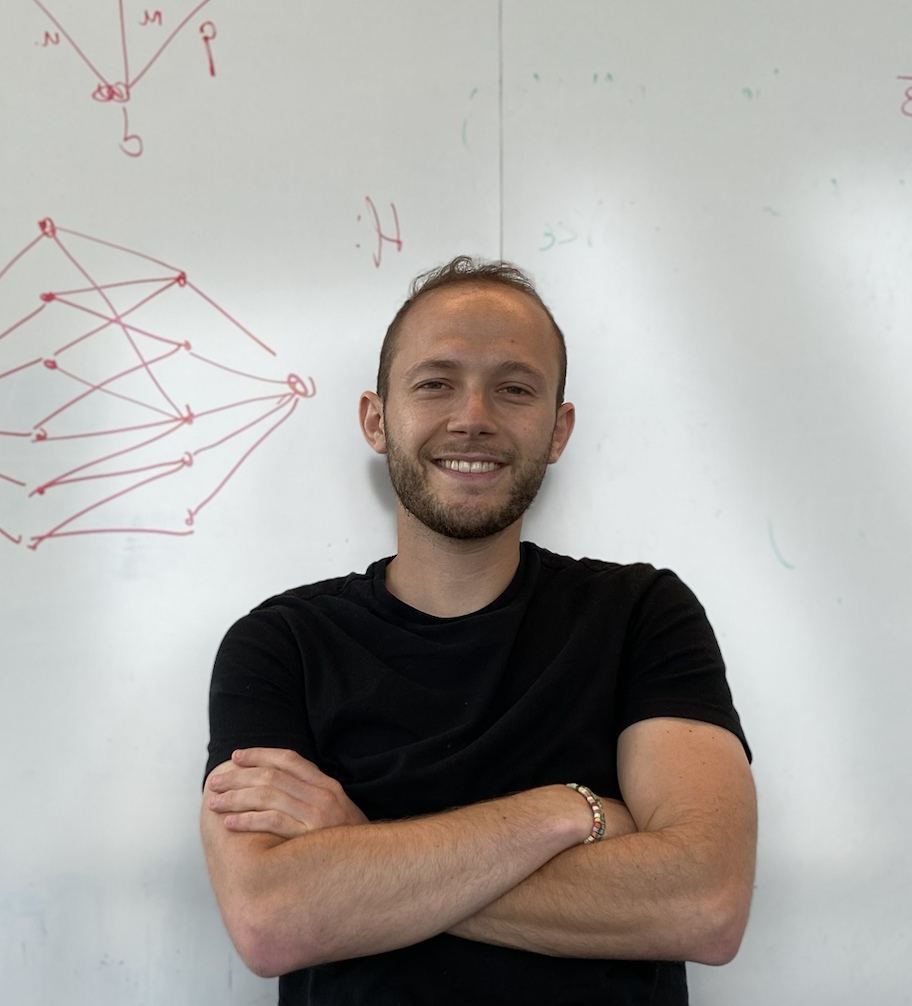

I'm a member of the Machine Learning Research team at Morgan Stanley. Previously, I was a postdoc at Harvard University, where I was hosted by Seth Neel. I obtained my Ph.D. from Boston University in the spring of 2024, under the supervision of Ran Canetti.

I'm interested in Machine Learning, AI, and Theoretical Computer Science. I've worked on research problems in a variety of areas, including:


- Machine Learning theory, especially complexity separations (e.g., random features vs. deep learning with gradient descent, multimodal vs. unimodal learning)

- Machine Learning interpretability, including data attribution and verifiability of attribution

- Computational Learning and Complexity theory, especially meta-complexity and the relationship between circuit lower bounds and computational learning theory (my thesis)

- Cryptography and Machine Learning security, including the model stealing problem and "Covert Learning"


Refereed Publications

- Efficiently Verifiable Proofs of Data Attribution. NeurIPS 2025 (to appear).
  Ari Karchmer, Martin Pawelczyk and Seth Neel.
  ArXiv preprint.

- The Power of Random Features and the Limits of Distribution Free Gradient Descent. ICML 2025.
  Ari Karchmer and Eran Malach.
  ArXiv preprint.

- On Stronger Computational Separations Between Multimodal and Unimodal Machine Learning. ICML 2024.
  Ari Karchmer.
  ArXiv preprint. "Spotlight" paper (3.5% acceptance rate).

- Agnostic Membership Query Learning with Nontrivial Savings: New Results and Techniques. ALT 2024.
  Ari Karchmer.
  ArXiv preprint.

- Distributional PAC-Learning from Nisan's Natural Proofs. ITCS 2024.
  Ari Karchmer.
  ArXiv preprint. Winner of best student paper award.

- Theoretical Limits of Provable Security Against Model Extraction by Efficient Observational Defenses. SaTML 2023.
  Ari Karchmer.
  IACR ePrint.

- Covert Learning: How to Learn with an Untrusted Intermediary. TCC 2021. (ab)
  Ran Canetti and Ari Karchmer.
  IACR ePrint.